When 90% of AI Agents Fail: What Zendesk’s Team Learned Building the Real Thing

Based on a talk by Mirza Beširović at ProductLab Conference 2025 [Slides included]

At ProductLab Conference 2025, Mirza Beširović, AI Product Leader at Zendesk, shared what their team has learned building and scaling AI agents in real production environments — where systems need to withstand high interaction volumes, unpredictable customer behavior, and real business stakes.

This post summarizes Mirza’s talk. Quotes are from the session, but the wording and commentary are mine.

Mirza stood on stage and asked a simple question:

“Raise your hand if you’ve built an AI agent in the past six months.”

About half the room raised their hands.

“Keep it up if it actually worked the way you wanted it to work.”

Half the hands went down.

“Keep it up if you’re still using it six months later.”

Only about 10% of the room still had a hand up.

The room got quiet: the uncomfortable kind of quiet that follows when someone says what everyone was thinking but nobody wanted to admit.

Mirza leads Zendesk’s AI agents platform, which processes millions of customer service interactions and deals with handoffs that fail, latency that kills trust, and agents that break.

But they’re figuring it out at scale. What Mirza shared wasn’t theory. It was battle scars.

“You’re not alone. We’re all still learning and experimenting. Most of us are still figuring out how this is working.”

That honesty? That’s what made the room lean in.

The Job Has Already Changed (We Just Don’t Know It Yet)

“Most PMs don’t realize that the product management job has already changed drastically and fundamentally. We’re all still kind of catching up with it.”

That’s what Mirza opened with. And I felt it land in the room.

We’re still writing PRDs for probabilistic systems. We’re still running sprints for research that takes either 6 days or 6 months. We’re still planning features when we should be orchestrating capabilities.

The tools broke. The methods broke. And we’re all pretending they still work.

Three Tensions Breaking Everything We Know

Mirza identified three emerging tensions that are breaking traditional product management:

1. The Control Paradox

Users tell you they want control. They want to “train the bot.” They want to influence how the system behaves.

But they absolutely don’t want any complexity. They don’t actually want to know how it works.

Real example from Zendesk: a business configuring its AI agent writes the instruction “When a customer says X, always escalate to a human agent.”

The very next instruction they write? “But resolve everything autonomously.”

This isn’t stupidity. This is humans being human.

“Most users want the illusion of control, not actual system effectiveness.”


The solution isn’t more control panels or more settings. It’s designing touch points that preserve user agency without breaking the underlying system.

What this looks like in practice:

Binary signals where human judgment actually matters (thumbs up/down, but contextualized)

Evaluation layers that aggregate feedback before modifying behavior

Three-layer architecture: big-picture analytics, drill-down capability, and qualitative feedback with conflict detection
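To make the evaluation-layer idea concrete, here is a minimal Python sketch. It is not Zendesk's implementation; the `Feedback` and `Instruction` types, the `min_votes` and `threshold` parameters, and the `"*"` wildcard are all hypothetical. The first function holds back individual thumbs-down votes until enough contextualized feedback accumulates for one intent; the second flags instruction pairs, like the escalate/resolve example above, that cannot both be followed.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Feedback:
    intent: str       # context for the vote: which intent the rated reply handled
    thumbs_up: bool   # the binary human signal


@dataclass(frozen=True)
class Instruction:
    trigger: str      # condition text; "*" (hypothetical wildcard) matches every conversation
    action: str       # e.g. "escalate" or "resolve"


def intents_needing_review(feedback, min_votes=20, threshold=0.6):
    """Aggregate votes per intent; flag only intents with enough votes and a low approval rate."""
    counts = defaultdict(lambda: [0, 0])  # intent -> [thumbs_up, total]
    for fb in feedback:
        counts[fb.intent][1] += 1
        if fb.thumbs_up:
            counts[fb.intent][0] += 1
    return {
        intent: ups / total
        for intent, (ups, total) in counts.items()
        if total >= min_votes and ups / total < threshold
    }


def find_conflicts(instructions):
    """Return pairs of instructions whose triggers overlap but whose actions differ."""
    conflicts = []
    for i, a in enumerate(instructions):
        for b in instructions[i + 1:]:
            overlapping = a.trigger == b.trigger or "*" in (a.trigger, b.trigger)
            if overlapping and a.action != b.action:
                conflicts.append((a, b))
    return conflicts


# The contradictory pair from the Zendesk example gets flagged as a conflict.
rules = [Instruction("customer says X", "escalate"), Instruction("*", "resolve")]
print(find_conflicts(rules))
```

Waiting for a minimum number of votes per intent is what keeps one frustrated user from rewriting the agent's behavior. A real system would likely need fuzzier matching than string equality to decide whether two triggers overlap.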

Here’s the insight that stuck with me: Users don’t want control—they want transparency. They want to understand how the system is performing and why it’s doing something. It’s a trust-building exercise, not a configuration challenge.

2. The Orchestration Trap

Specialized agents perform better. A focused agent that handles order cancellations will typically outperform a general-purpose chatbot.

But here’s the trap: More specialization = more agents = more handoffs = more latency = more failure points.

When Zendesk handles a simple customer query like “cancel my order,” it’s not one agent. It’s a choreographed dance:

Agent detects intent

Agent checks knowledge base

Agent makes API call to retrieve data

Agents validate the context handoff

Agent generates response

Agent evaluates response quality

An LLM judge scores overall conversation success

Seven agents. One query.
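The arithmetic behind “more handoffs = more latency = more failure points” fits in a few lines of Python. The step names follow the list above, but every latency and success rate is an invented, illustrative number, not a Zendesk measurement. The sketch also assumes the seven steps run one after another on the critical path and fail independently; in practice some checks may run in parallel or after the reply is sent.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Step:
    name: str
    latency_ms: float    # illustrative value, not a measurement
    success_rate: float  # illustrative probability that the step hands off correctly


# The seven steps behind one "cancel my order" query, with made-up numbers.
PIPELINE = [
    Step("detect intent", 300, 0.98),
    Step("check knowledge base", 400, 0.97),
    Step("call order API", 250, 0.99),
    Step("validate context handoff", 150, 0.99),
    Step("generate response", 900, 0.97),
    Step("evaluate response quality", 500, 0.98),
    Step("judge conversation", 600, 0.99),
]


def end_to_end(steps):
    """Sequential chain: latencies add, independent success probabilities multiply."""
    total_latency_ms = sum(s.latency_ms for s in steps)
    success = math.prod(s.success_rate for s in steps)
    return total_latency_ms, success


latency, success = end_to_end(PIPELINE)
print(f"{latency / 1000:.1f} s end to end, {success:.0%} chance every step succeeds")
```

With these made-up numbers the chain takes 3.1 seconds and completes cleanly only about 88% of the time, even though no single step succeeds less than 97% of the time. Latency adds up while reliability multiplies down, which is why each extra specialized agent has to earn its place.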

And users expect sub-second responses because that’s how humans communicate.

“Humans have very low latency. AI agents don’t. The number one problem we all have is latency, latency, latency.”

That gap? That’s what breaks trust.

This is the shift: You’re not building product interfaces anymore. You’re creating digital workspaces where AI agents collaborate to solve human problems.

“We’re creating digital workspaces for AI agents to collaborate with one another. Think Second Life, but agentic.”

The idea: specialized agents with clear roles, capabilities, and purposes, passing context to one another.

We’re designing the space where agents work, not just the output they produce.

3. The Evolution Crisis

Traditional planning methods are failing for AI products.

AI research cycles don’t fit into deterministic PRDs. Things can take 6 days or 6 months—it’s the nature of probabilistic systems.

“You can’t just write an upfront spec. I mean, you never should have been writing an upfront spec anyway, but now you really can’t with an AI system.”

You can’t write an upfront spec. (And honestly, you never should have been doing that anyway.)

This is causing teams to fragment along “AI” vs “traditional” lines.

And it raises an uncomfortable question: Should teams building AI products stop building user-facing features? Should autonomous agents be building the features instead?

Mirza didn’t answer that. He just asked it. And it hung in the air.

Because that’s the future we’re walking into, whether we’re ready or not.

What’s Actually Changing in the PM Toolkit

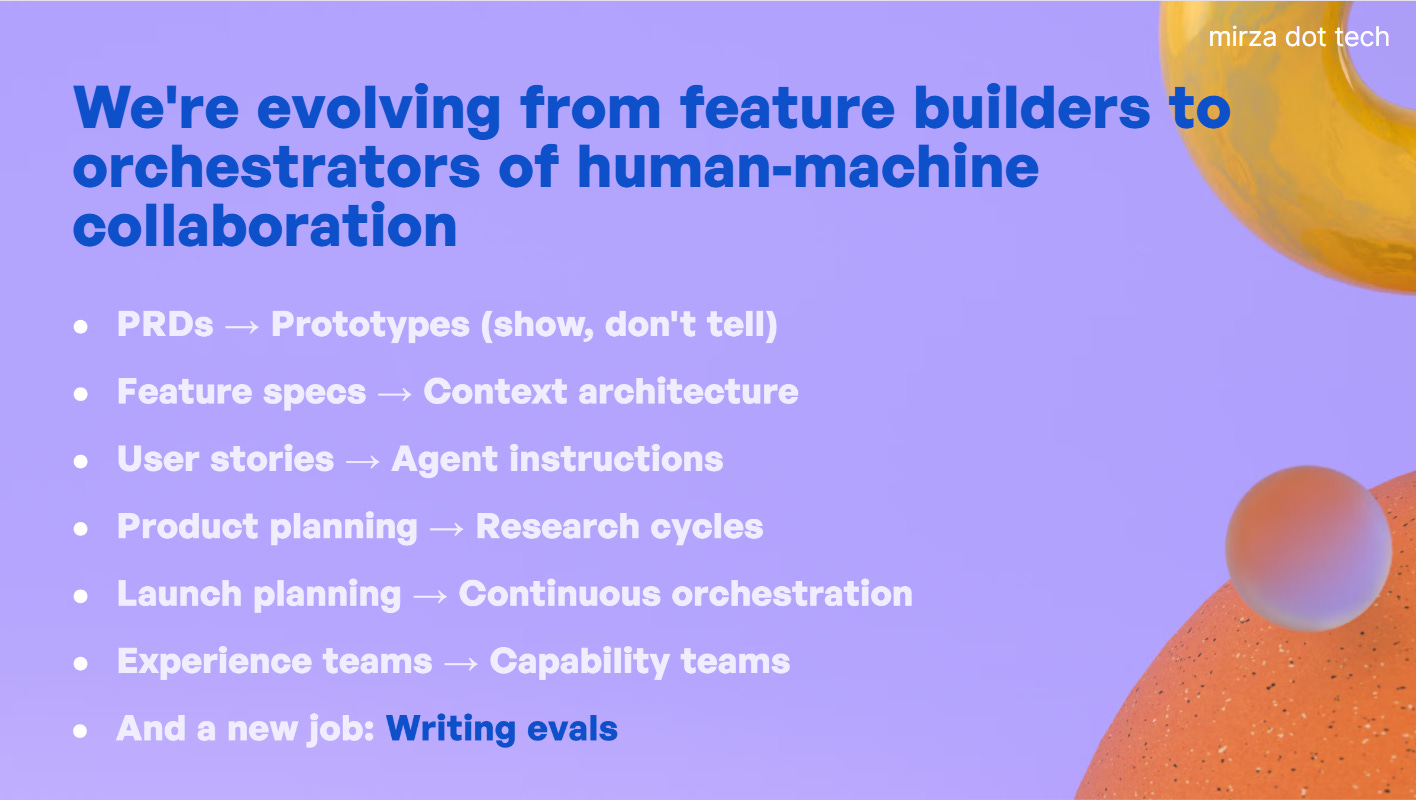

The evolution from feature builder to orchestrator is happening now:

PRDs → Prototypes (show, don’t tell)

Feature specs → Context architecture (prompts, agent instructions, context engineering)

User stories → Agent instructions (what should the agent do in this system?)

Product planning → Research cycles (hypothesis testing, not roadmap commitments)

Launch planning → Continuous orchestration (how agents interact and evolve)

Experience teams → Capability teams (building what agents can do)

And then there’s the new skill that will define PM interviews in 2026:

Evals.

Evals are frameworks for measuring AI system performance: metrics, scorers, and evaluation datasets.

“The one skill I promise you everyone will be testing for in job interviews in the next year or so will be about evals. So learn evals.”

Mirza’s prediction: If you’re not learning this now, you’re already behind.
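For readers new to evals, here is a minimal sketch of the three ingredients named above: an evaluation dataset, a scorer, and a metric (pass rate). It assumes a hypothetical intent-classifying agent; `keyword_agent` is a deliberately naive stand-in, and exact match is only the simplest possible scorer. Evals for free-form LLM output often use rubric-based or LLM-as-judge scorers instead.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Case:
    user_message: str
    expected_intent: str


# Hypothetical evaluation dataset: inputs paired with the answer we expect.
DATASET = [
    Case("cancel my order", "cancel_order"),
    Case("I want to cancel order 1234", "cancel_order"),
    Case("where is my package?", "track_order"),
    Case("can I get a refund?", "refund"),
]


def exact_match(predicted: str, expected: str) -> float:
    """Scorer: 1.0 if the agent picked the expected intent, else 0.0."""
    return 1.0 if predicted == expected else 0.0


def run_eval(agent: Callable[[str], str], dataset, scorer=exact_match):
    """Metric: mean score over the dataset, plus the inputs that failed."""
    scores = [scorer(agent(c.user_message), c.expected_intent) for c in dataset]
    failures = [c.user_message for c, s in zip(dataset, scores) if s < 1.0]
    return sum(scores) / len(scores), failures


def keyword_agent(message: str) -> str:
    """Stand-in for a real agent: a naive keyword classifier."""
    text = message.lower()
    if "cancel" in text:
        return "cancel_order"
    if "where" in text or "track" in text:
        return "track_order"
    return "unknown"


pass_rate, failures = run_eval(keyword_agent, DATASET)
print(pass_rate, failures)
```

Here the stand-in agent passes three of four cases and misses the refund question. That kind of signal shows where an agent breaks and why, and rerunning the same dataset after every prompt or model change is what turns it into a regression check.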

The New PM: Builder + Orchestrator

The PMs who will thrive aren’t just strategists. They’re:

Builders:

Creating agents for themselves (personal experiments)

Prototyping relentlessly (not writing specs)

Designing agent interaction patterns

Understanding what breaks and why

Orchestrators:

Architecting how humans and machines collaborate

Deploying agents to solve business outcomes

Managing context flow between systems

Creating transparency and explainability

Does everyone need to become a coder? No. Strategy and market understanding remain critical.

But the ability to prototype, to experiment, to show instead of describe—that’s the new table stakes.

Your Homework This Week

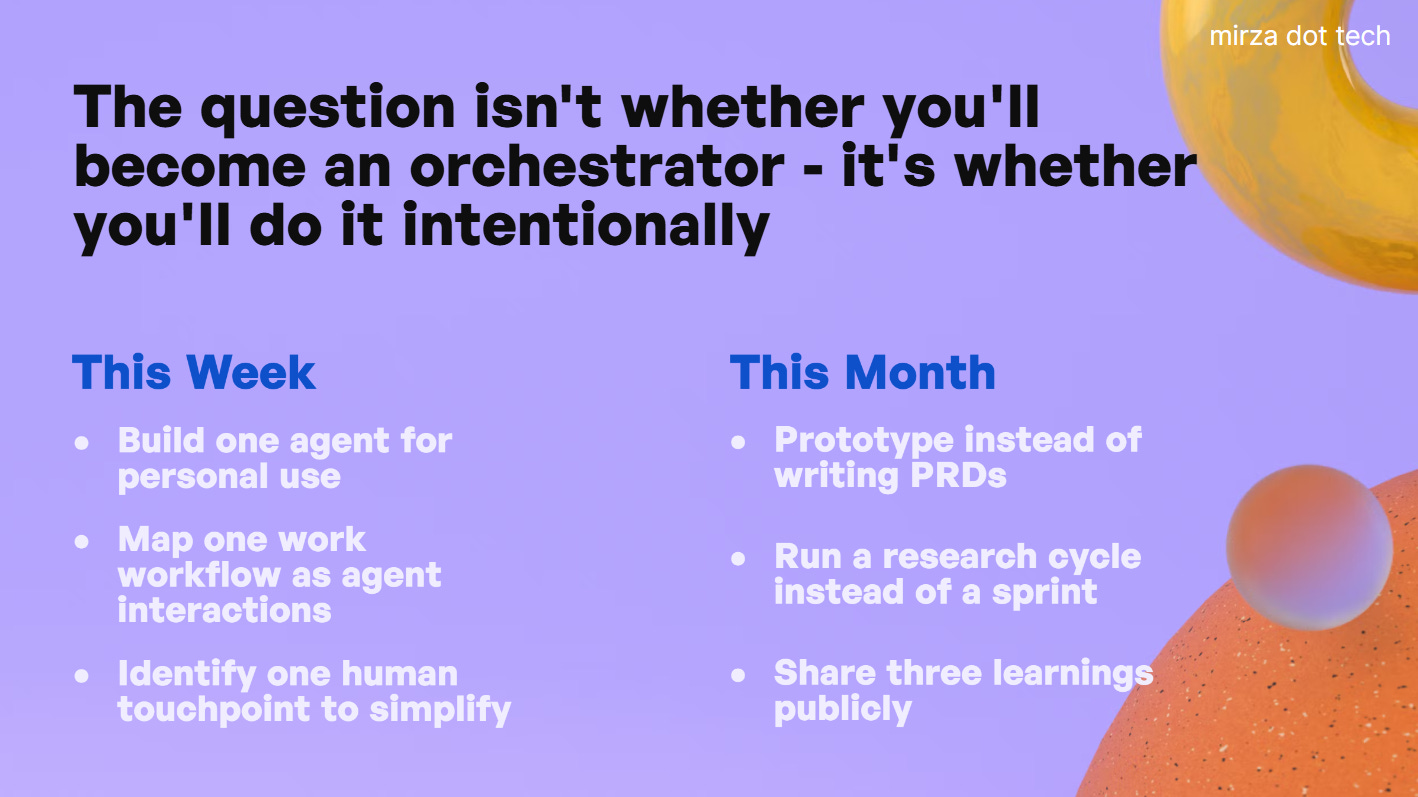

Mirza ended with a challenge:

“The question isn’t whether you’ll become an orchestrator, it’s whether you’ll do it intentionally.”

Here’s what he wants you to do:

Build one agent. Use Zapier, ChatGPT, whatever. Map one of your workflows (personal or work) as an agent interaction.

Identify one human touchpoint where you could simplify the experience.

Prototype instead of writing a PRD. Try running a research cycle instead of a sprint.

Share your learnings publicly. Create social proof. Document what breaks and why.

This is how you break into an AI PM role even if you don’t have one yet.

What This Means For Us

I’m sitting here at The Social Hub Berlin on a rainy Monday, and I keep thinking about that 10% stat.

Only about 10% of the room was still using their agent six months later.

That’s not because the technology isn’t ready. It’s because we’re still applying old methods to a new paradigm.

We’re trying to plan the unplannable. Control the uncontrollable. Simplify the irreducibly complex.

And the teams that figure this out first? They’re not writing better PRDs. They’re not running tighter sprints.

They’re prototyping. They’re building evals. They’re designing for orchestration, not features.

They’re becoming the orchestrators Mirza described.

The Uncomfortable Truth

Here’s what Mirza said that I keep coming back to:

“None of us have all this figured out. But community is the competitive advantage that we as product people have.”

This is why ProductLab exists. These conversations—honest ones about what’s breaking and what’s working—are how we collectively figure this out.

Because the PM job has already changed.

The question is whether you’ll lead that change or react to it.

—Daniele

P.S. Mirza runs a newsletter for aspiring product leaders at You Are The Product. If you’re serious about breaking into AI PM, subscribe. He’s documenting the journey in real time. Connect with him on LinkedIn.